OpenAI's Assistants API is Deprecated: Migrate to the New Responses API

TL;DR

With the introduction of the new Responses API (an upgraded successor to the Chat Completions API), OpenAI plans to sunset the Assistants API on 26th August, 2026. The Chat Completions API will still be supported, but OpenAI recommends using the Responses API for all new projects.

Originally, the Assistants API was created for sophisticated tool calling and execution in agentic workflows, but it also supported thread-like persistent chats, which was a huge help in maintaining chats and their context.

Here's a step-by-step tutorial for migrating existing Chat Completions and Assistants API code to the Responses API. To start, let me lay out the differences between these APIs and how their concepts map onto one another.

Chat Completions >> Responses

If your application only uses the Chat Completions API for stateless, single-turn interactions, your migration path is straightforward.

A Response used on its own is also stateless: its output depends only on the input you send.

// JavaScript SDK ---

const context = [
  { role: 'system', content: 'You are a generalist.' },
  { role: 'user', content: 'hey there' }
];

// Chat Completions: the message array goes in `messages`
const completion = await client.chat.completions.create({
  model: 'gpt-5',
  messages: context
});

// Responses: the same array goes in `input`
const response = await client.responses.create({
  model: "gpt-5",
  input: context
});
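If you have many call sites to migrate, the parameter rename above can be wrapped in a small helper. The following is a minimal sketch, not an official utility: `toResponsesParams` and `readText` are hypothetical names, and only `messages` and the token-limit fields are translated. Any other option is passed through unchanged and may need its own translation, so check it against the API reference before relying on it.

```javascript
// Hypothetical helper: translate Chat Completions params into Responses params.
// Only `messages` and the token limit are renamed; everything else is passed through.
function toResponsesParams(chatParams) {
  const { messages, max_tokens, max_completion_tokens, response_format, ...rest } = chatParams;
  if (response_format !== undefined) {
    // Structured outputs move to `text.format` and need a manual rewrite.
    throw new Error("response_format must be rewritten as text.format");
  }
  const params = { ...rest, input: messages };
  const limit = max_completion_tokens ?? max_tokens;
  if (limit !== undefined) params.max_output_tokens = limit;
  return params;
}

// Hypothetical helper: read the generated text from either result shape.
function readText(result) {
  // The SDK exposes `output_text` as a convenience field on Responses results.
  if (typeof result.output_text === "string") return result.output_text;
  // Chat Completions results keep the text under choices[0].message.content.
  return result.choices?.[0]?.message?.content ?? "";
}
```

With this in place, an existing call such as `client.chat.completions.create(params)` could become `client.responses.create(toResponsesParams(params))`, assuming `params` uses only the fields handled here.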

// API Endpoints ---

// Chat Completions
POST /v1/chat/completions

// Responses
POST /v1/responses

If you use structured outputs by specifying response_format with zodResponseFormat:

// Chat Completions API
response_format: zodResponseFormat(diffPayloadSchema, "json_diff_response")

You now have to specify it as shown below:

// Responses API
text: {
  format: {
    type: "json_schema",
    name: "json_diff_response",
    schema: zodResponseFormat(diffPayloadSchema, "json_diff_response").json_schema.schema,
  },
},

Assistants API >> Responses API

Assistants API

The core architecture of the Assistants API revolved around four key entities: Assistants (configuration), Threads (messages), Runs (execution), and Run Steps (intermediate actions).

- 1. Assistant - The persistent object defining configuration. It bundled the core settings like the target model, system instructions, and tools (e.g., code_interpreter, file_search).

- Limitation - Management/Versioning was often cumbersome as it was defined programmatically.

- 2. Thread - The container for the conversation session. It stored the server-side conversation history, but strictly consisted of only messages.

- Limitation - Limited storage capability, only storing messages.

- 3. Run - The asynchronous process responsible for executing the Assistant's actions against a Thread.

- Limitation - Required complex asynchronous polling loops. Could enter states like requires_action for tool execution, complicating client-side orchestration. The Thread was locked while a Run was in progress.

- 4. Run Steps - Detailed internal objects tracking the progress and intermediate actions of the Run.

- Limitation - Generalized objects tied to the complex, asynchronous lifecycle of the Run, necessitating checks and event handling for tracking progress.
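To make the polling limitation concrete, here is a rough sketch of the client-side orchestration a non-streaming Run tended to need. It is illustrative only: `nextStep` and `waitForRun` are hypothetical names, and `fetchRun` and `handleToolCalls` are placeholder callbacks standing in for the SDK calls that retrieve a Run and submit tool outputs. The status names are the ones the Assistants API documents for Runs.

```javascript
// Run statuses grouped by what the client has to do next.
const WAITING = new Set(["queued", "in_progress", "cancelling"]);
const FINISHED = new Set(["completed", "failed", "cancelled", "expired", "incomplete"]);

// Decide the next client action for a given Run status.
function nextStep(status) {
  if (WAITING.has(status)) return "poll_again";
  if (status === "requires_action") return "submit_tool_outputs";
  if (FINISHED.has(status)) return "stop";
  throw new Error(`Unknown run status: ${status}`);
}

// Poll until the Run reaches a terminal status or the polling budget runs out.
// fetchRun: hypothetical async callback returning the latest Run object.
// handleToolCalls: hypothetical async callback that runs tools and submits their outputs.
async function waitForRun(fetchRun, handleToolCalls, { intervalMs = 1000, maxPolls = 60 } = {}) {
  for (let i = 0; i < maxPolls; i++) {
    const run = await fetchRun();
    const step = nextStep(run.status);
    if (step === "stop") return run;
    if (step === "submit_tool_outputs") await handleToolCalls(run);
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error("Run did not finish within the polling budget");
}
```

Every application had to carry some version of this loop, which is the overhead the Responses API removes.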

The Assistants API was always in beta; in other words, it never graduated to a stable release. Here's how the Assistants API looks when implemented in JavaScript with the help of OpenAI's SDK.

Assistants API Flow

- 1. Assistant Creation

import OpenAI from "openai";

const openai = new OpenAI({
  apiKey: import.meta.env.OPENAI_API_KEY,
});

const assistant = await openai.beta.assistants.create({
  model: "gpt-4.1-2025-04-14",
  name: "threadAssistant",
  instructions: "Provide output in markdown format."
})

- 2. Thread Creation

const thread = await openai.beta.threads.create()

- 3. Adding a Message to the Thread

const newThreadMessage = await openai.beta.threads.messages.create(
  threadId,
  {
    role: "user",
    content: message,
  }
)

- 4. Running a thread by creating a Run

const run = openai.beta.threads.runs.stream(
  threadId,
  {
    assistant_id: assistantId
  }
);

- 5. Listening to Run Events (Steps)

// while streaming is enabled
run.on("messageCreated", (message) => {
  // ...
});

run.on("textDelta", (textDelta) => {
  // ...
});

/** ... ... ... **/

run.on("end", () => {
  // ...
});

run.on("error", (err) => {
  // ...
});

Responses API

The Responses API changes this flow a bit and gives new names to the core architectural entities of the Assistants API.

Assistants -> Prompts

Threads -> Conversations

Runs -> Responses

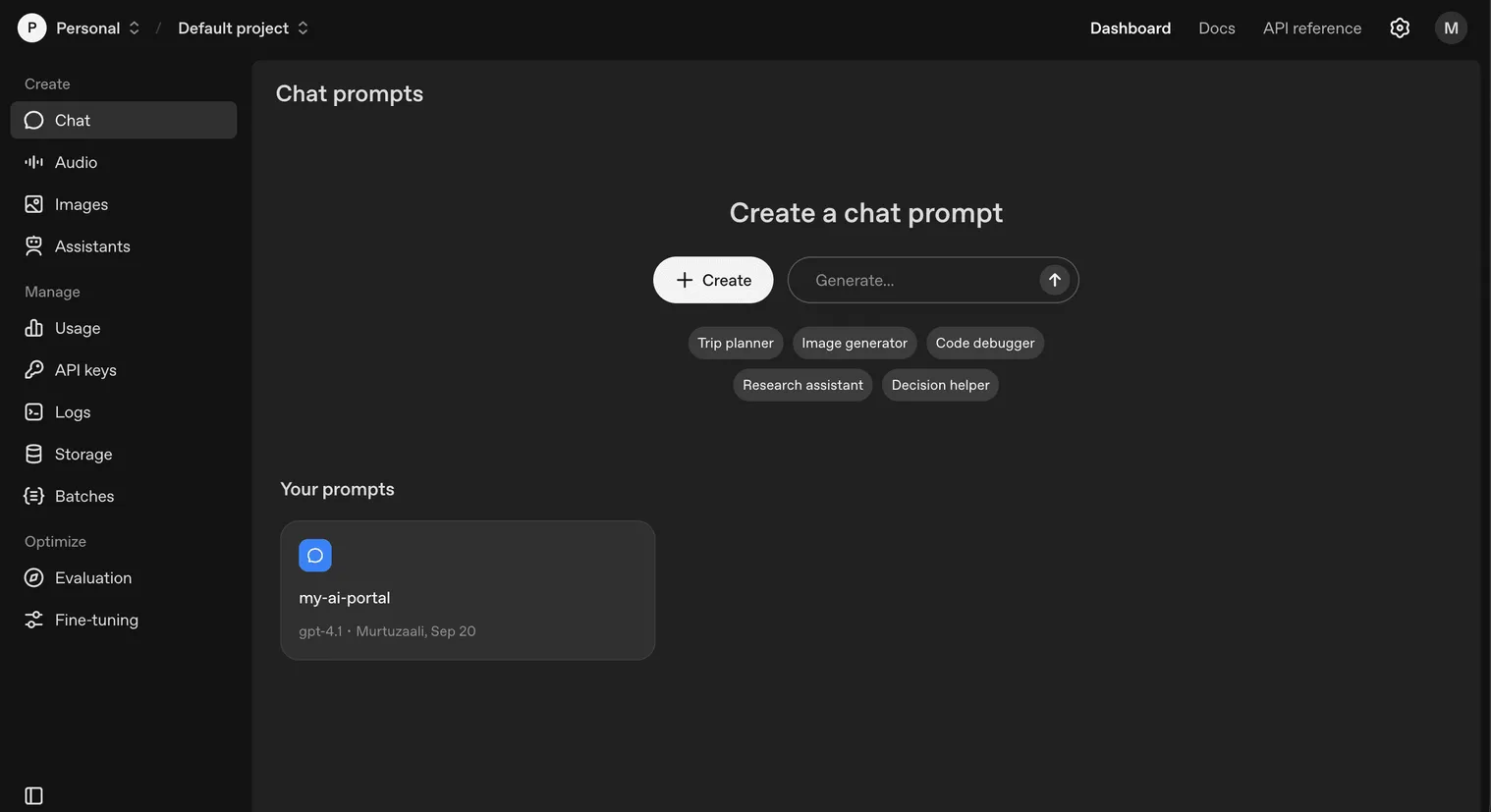

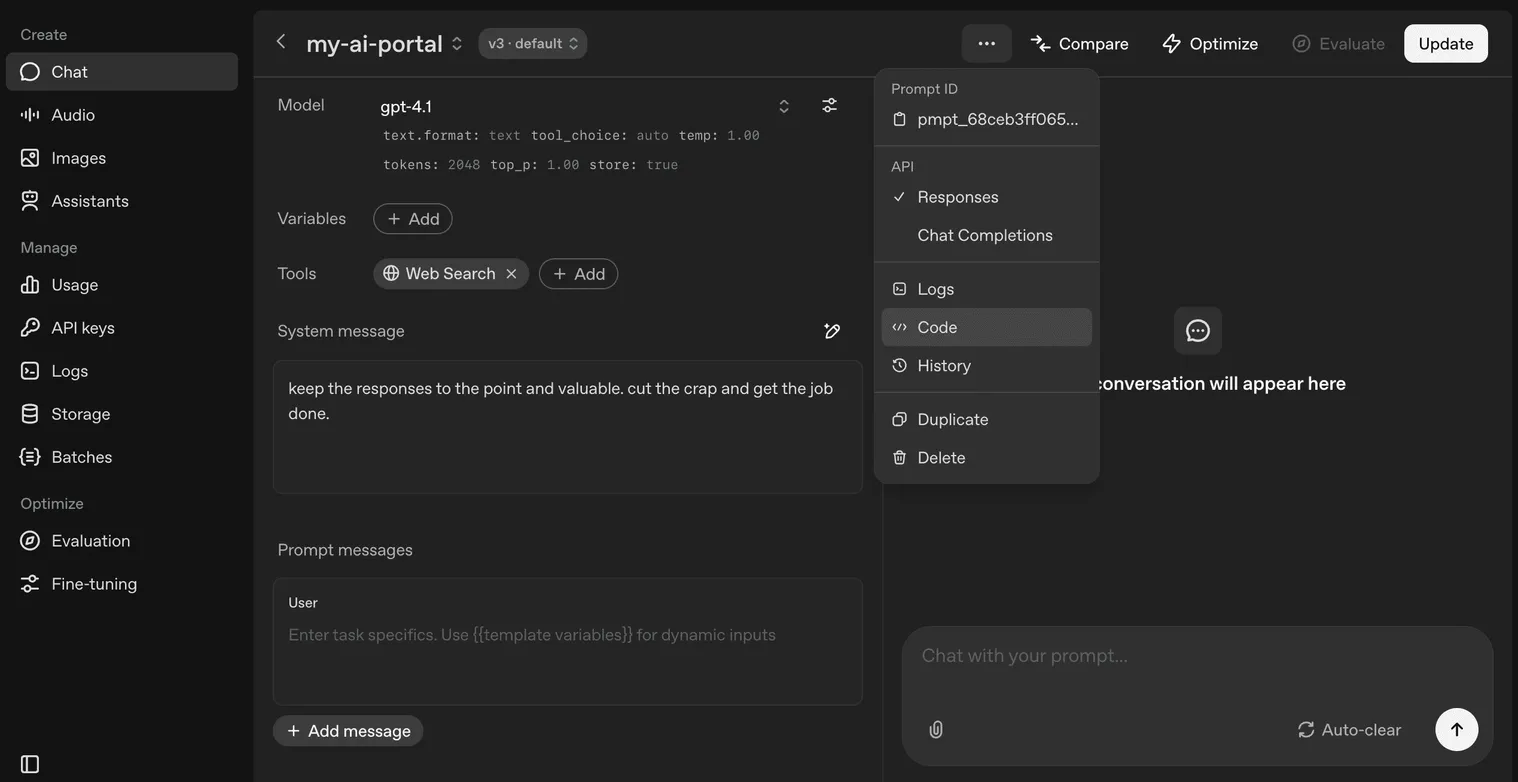

Run-Steps -> Items- 1. Prompt - Prompts are designed to hold configuration like model choice, tools, and system guidance (instructions), allowing them to focus purely on high-level behavior and constraints. They can strictly be accessed only from the Dashboard, and there's no programmatic way to create them. You can grab the ID of the prompt to reference it from code. One awesome thing about them is in-built versioning support.

- 2. Conversation - Conversations store generalized objects called Items, which represent a stream of data beyond just text messages, including tool calls, tool outputs, and other information. When creating a response, you can pass the conversation ID, and the conversation's context and state are then available to the model and kept up to date.

- 3. Response - The cumbersome asynchronous Run process is replaced by the simpler Response primitive. You send input items and get output items back, unifying the execution process. As per OpenAI, Responses benefit from lower costs due to substantially improved cache utilization (showing a 40% to 80% improvement in internal tests over Chat Completions).
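To illustrate what "output items" look like in practice, the sketch below walks a response's `output` array. The helper names (`extractOutputText`, `countItemTypes`) and the `sampleResponse` object are made up for illustration; the item shapes follow the documented Responses format, where a `message` item carries a `content` array of parts such as `output_text`.

```javascript
// Concatenate the text parts of every message item in response.output.
function extractOutputText(response) {
  return response.output
    .filter((item) => item.type === "message")
    .flatMap((item) => item.content)
    .filter((part) => part.type === "output_text")
    .map((part) => part.text)
    .join("");
}

// Count items by type, to show that output is more than just messages.
function countItemTypes(items) {
  const counts = {};
  for (const item of items) counts[item.type] = (counts[item.type] ?? 0) + 1;
  return counts;
}

// Hand-written sample for illustration only, not real API output.
const sampleResponse = {
  output: [
    { type: "reasoning", summary: [] },
    { type: "function_call", name: "get_weather", arguments: "{}", call_id: "call_1" },
    {
      type: "message",
      role: "assistant",
      content: [
        { type: "output_text", text: "Hello" },
        { type: "output_text", text: " world" },
      ],
    },
  ],
};

console.log(extractOutputText(sampleResponse));
console.log(countItemTypes(sampleResponse.output));
```

In everyday code the SDK's `output_text` convenience field usually covers the text case; walking the items yourself matters mostly when you need tool calls or other non-message items.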

Responses API Flow (For Persistent Conversations)

- 1. Create a Prompt through the Dashboard

Source: platform.openai.com

- 2. Create a Conversation

import OpenAI from "openai";

const openai = new OpenAI({
  apiKey: import.meta.env.OPENAI_API_KEY,
});

// The Conversation takes over the role the Thread used to play
const conversation = await openai.conversations.create();

- 3. Create a Response

const run = await openai.responses.create(
  {
    prompt: {
      id: "<prompt_id_from_dashboard>"
    },
    input: [
      {
        role: "user",
        content: message,
      }
    ],
    conversation: "<conversation_id>",
    store: true,
    stream: true,
  }
)

Note that the prompt is configured in the Dashboard, so when you specify the prompt ID, the request uses the configuration of the prompt created there. If you want, you can also override its properties from code.

The input property, however, is additive. The dashboard has message fields that let you define a system message and some default messages to start a conversation, and the user message you send from code is appended to the messages defined in the prompt.

Source: platform.openai.com
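One way to keep the prompt reference, the conversation ID, and any per-call overrides in one place is a small request builder. This is a sketch under assumptions: `buildResponseRequest` is a hypothetical helper, the IDs in the usage line are placeholders, and which properties can actually be overridden depends on the prompt's configuration, so treat `overrides` as something to verify against the API reference.

```javascript
// Hypothetical helper: assemble the params object for openai.responses.create().
function buildResponseRequest({ promptId, promptVersion, conversationId, message, overrides = {} }) {
  const prompt = { id: promptId };
  // Pin a specific prompt version only when one is given; otherwise the default is used.
  if (promptVersion !== undefined) prompt.version = promptVersion;

  return {
    prompt,
    conversation: conversationId,
    // Only the new user message is sent; the prompt's own messages come from the Dashboard.
    input: [{ role: "user", content: message }],
    store: true,
    // Per-call overrides (for example stream: true) are spread last so they win.
    ...overrides,
  };
}
```

A call would then look like `openai.responses.create(buildResponseRequest({ promptId: "<prompt_id_from_dashboard>", conversationId: "<conversation_id>", message, overrides: { stream: true } }))`.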

- 4. Iterate over Response Events

// while streaming is enabled (stream: true)
const run = await openai.responses.create(
  {
    /** */
    stream: true,
  }
)

for await (const event of run) {
  if (event.type === "response.output_text.delta") {/** */}
  if (event.type === "response.output_item.added") {
    // event.item only exists on output_item events, so inspect it inside this check
    if (event.item.type === "message" && event.item.status === "in_progress") {/** */}
  }
  /** ... */
  if (event.type === "response.failed") {/** */}
  if (event.type === "error") {/** */}
  if (event.type === "response.completed") {/** */}
}

Thoughts

The Assistants API was a huge help in bringing thread-like conversations to life, and it worked to some extent. Now, OpenAI has decided to revamp and improve upon it in the form of the Responses API. As mentioned before, the Assistants API is deprecated and will be shut down on 26th August, 2026, so now is the time to plan a migration to the Responses API.